Mapping the AI Toolchain

Bringing AI to the next wave of developers

Nov 14, 2018

The first wave of artificial intelligence has been about experts: brilliant technologists doing cutting-edge research and building advanced systems in places like Silicon Valley.

The second wave of AI will be about practitioners: traditional developers becoming AI rockstars and addressing a wide range of business problems.

Access to AI will be democratized.

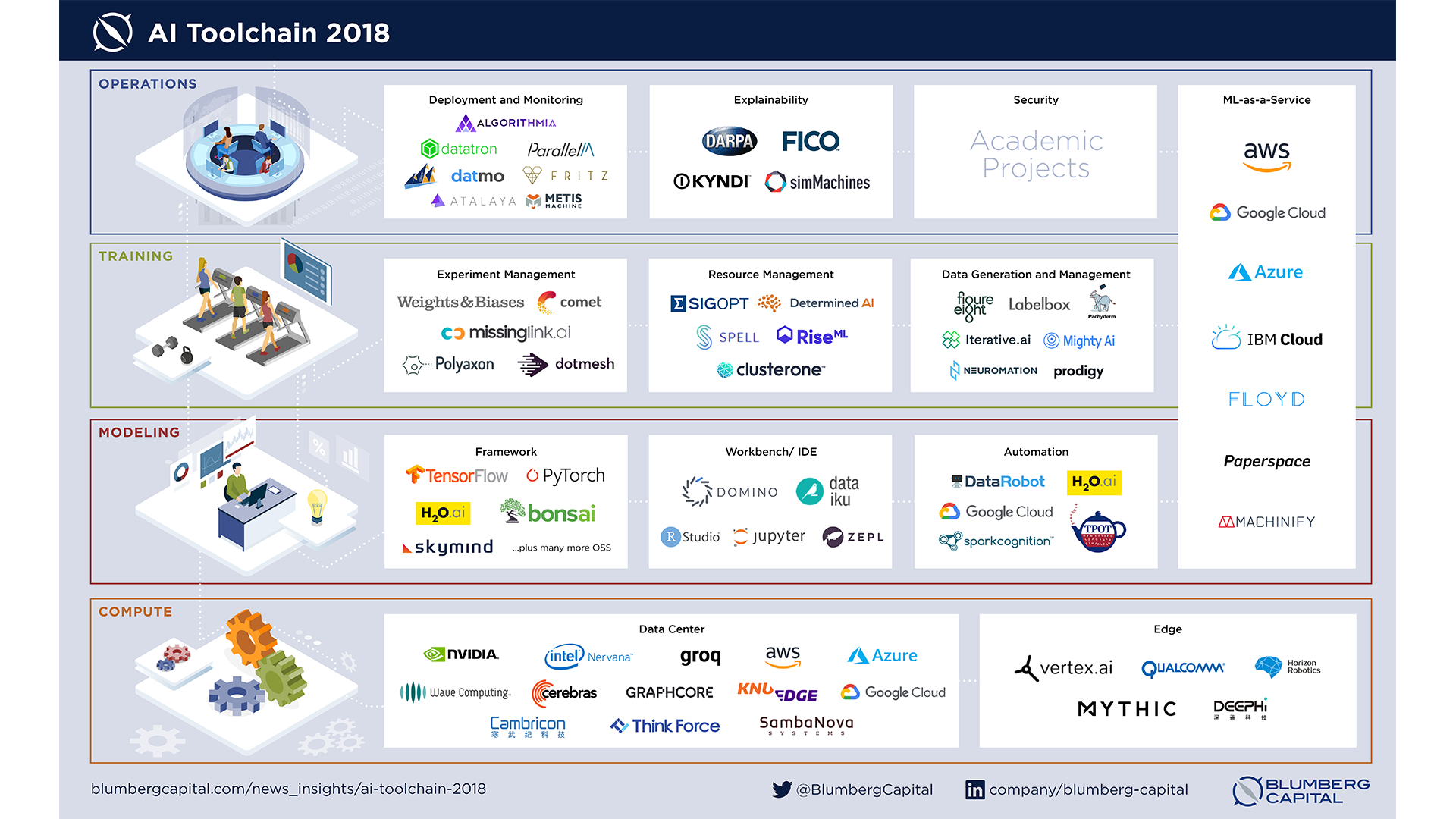

During this transition, we believe AI – particularly deep learning – will begin to resemble a general-purpose computing platform, a topic we explored in a recent Forbes article. But a new set of tools will be necessary to make that vision a reality. To advance the conversation, we are publishing an alpha landscape of the emerging AI Toolchain:

(See methodology at the end of this post.)

Why AI tooling?

Artificial intelligence has captured the world’s attention based on its promise to generate value from big data and to deliver a new breed of intelligent applications. Enterprises, venture capitalists, academic institutions and governments are betting billions of dollars – with good reason – that AI will be a competitive advantage in the coming decades.

Today’s reality, though, is that AI is in the earliest stages of development and adoption – what Kai-Fu Lee calls the “first wave.”[1] A recent O’Reilly survey found that half the organizations in its data-savvy audience are still “exploring” or “just looking” at machine learning.[2] That number is significantly higher among small and medium-sized organizations.[3] Anecdotally, only a select few (e.g., tech giants, hedge funds, intelligence agencies) are seeing real value.

The gap between expectation and reality is driven, in large part, by the difficulty of getting AI to work in practical use cases and at scale. One ML practitioner at Lyft calls this the “primordial soup phase” of AI.[4] An IDC survey found that 77 percent of enterprises have “hit a wall” with AI infrastructure, many of them more than once.[5] This problem is made more acute by the shortage of talented AI developers and unclear ownership of AI initiatives.

Delivering on the potential of artificial intelligence will require a dramatic improvement in the systems and tools that help AI developers, from the most experienced to the newly engaged, do their jobs effectively.

Compute: The gold rush is on

Infrastructure has played a central role in the AI revolution from the start.

Landmark results in deep learning, including the 2012 AlexNet paper, relied on the massively parallel computing resources of graphics processing units (GPUs) to train networks that were larger, deeper and more powerful than previously possible.[6]

Fast-forward to 2018, and AI compute technologies have become an explosive area of development and investment. NVIDIA is the clear leader, powering the majority of AI training workloads and surpassing $100 billion in market capitalization. Intel is playing catch-up through acquisitions of companies like Nervana, Movidius and Altera, with the company’s first “neural network processor” expected in late 2019.[7] The large cloud providers (AWS, Azure, Google) are developing chips as well, but so far have found only niche adoption.

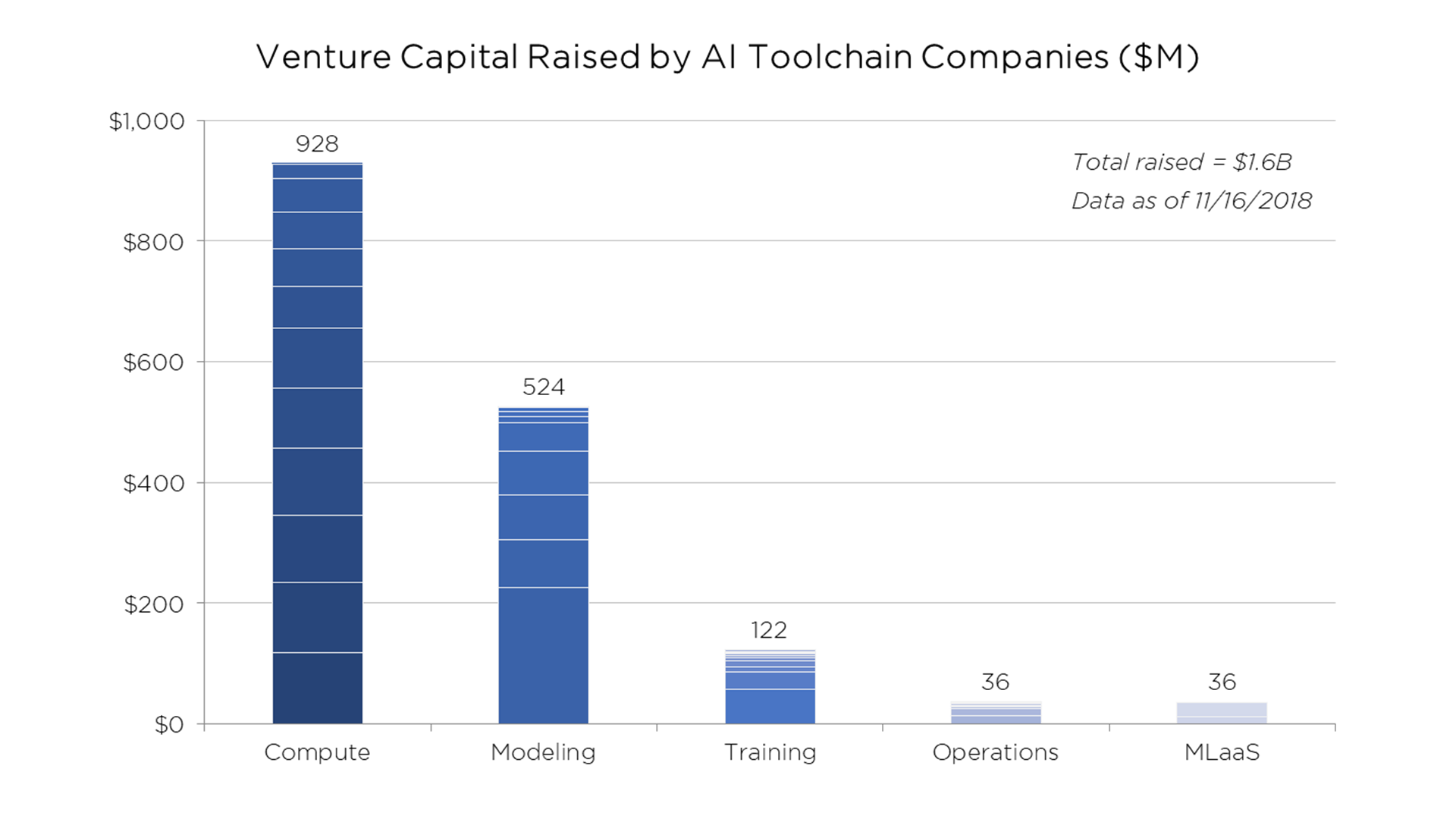

Entrepreneurs believe they can beat the incumbents to the punch. More than a dozen companies in this category have raised over $800 million in venture capital. Most of these companies are pursuing new architectures for data center chips used for training and inference. Some others are experimenting with unique power/performance profiles that target edge devices, a key growth area not yet dominated by large vendors.

Few (if any) startups have delivered a commercial AI chip so far. Going to market will be difficult, requiring substantial support from device manufacturers, framework developers and investors. But the opportunity is lucrative. Competition in AI compute technologies will likely continue to heat up.

Modeling: Frameworks are mature, but development environments are works-in-progress

Innovative new chips need intuitive programming interfaces and widespread developer support to succeed.

AI frameworks have already reached a reasonably mature state, in some ways outpacing the development of the underlying chips. TensorFlow is emerging as a de facto standard, with a number of good alternatives including PyTorch and H2O.[8] This is part of a long-running trend redefining the idea of a computing platform in both traditional and AI development: where developers once targeted a particular processor or operating system, they now target a framework instead.

Systems to help developers write models, however, have room to grow. Popular workbenches like Domino make it easy to access modeling tools and collaborate on projects. IDEs like RStudio and notebooks like Jupyter enable an iterative model construction process. These tools, though effective for their intended purposes, do not assist with unique AI needs, such as introspecting and interactively adjusting data.[9] There is a big opportunity to build the “home screen” for AI developers.

Automation of the modeling process, likewise, is still more tailored to traditional data science, with DataRobot the apparent leader. Pioneering, AI-specific efforts like Google’s AutoML or TPOT are interesting experiments to test the level of automation developers find valuable. There is skepticism among some practitioners, though, that these types of solutions will work reliably or deliver major benefits.[10] Automated modeling remains an area of ongoing technology and product research.

Training: The burning platform need

There is an old adage, from sci-fi writer Arthur C. Clarke, that “any sufficiently advanced technology is indistinguishable from magic.”

If there is magic in deep learning, it happens at the training step. Starting with just a few lines of code (a model definition), and without explicit human intervention, training produces a system capable of performing advanced computing tasks (e.g., classification) across a wide range of use cases.

Of course, magic is not necessarily a good thing. Training is mostly a trial-and-error process today. Results are notoriously difficult to predict or to reproduce, owing in part to the complex and non-obvious relationships between various layers of a neural network. Experiment management tools like Weights & Biases and Comet address this problem by tracking, comparing and visualizing training runs (“experiments”). This attempt to impose order and standards is much needed, especially on larger AI teams, but no vendor has established a clear lead.
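To make experiment tracking concrete, here is a minimal Python sketch of the bookkeeping such tools automate: recording each run’s hyperparameters and metrics so runs can be compared and reproduced later. The `ExperimentLog` and `Run` classes are hypothetical and do not reflect the Weights & Biases or Comet APIs. Real products add persistence, dashboards and artifact storage on top of this core idea.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Run:
    """One training run ("experiment"): its hyperparameters and logged metrics."""
    name: str
    params: dict
    metrics: dict = field(default_factory=dict)


class ExperimentLog:
    """Hypothetical in-memory tracker; real tools persist runs and visualize them."""

    def __init__(self):
        self.runs = []

    def start(self, name, **params):
        run = Run(name=name, params=params)
        self.runs.append(run)
        return run

    def log(self, run, key, value):
        # Keep only the latest value per metric, for simplicity.
        run.metrics[key] = value

    def best(self, metric, higher_is_better=True):
        # Compare only runs that actually logged this metric.
        scored = [r for r in self.runs if metric in r.metrics]
        pick = max if higher_is_better else min
        return pick(scored, key=lambda r: r.metrics[metric])

    def to_json(self):
        # A serializable record of every run is what makes results reproducible.
        return json.dumps([r.__dict__ for r in self.runs], sort_keys=True)


log = ExperimentLog()
baseline = log.start("baseline", lr=0.1, layers=2)
log.log(baseline, "val_acc", 0.81)
deeper = log.start("deeper", lr=0.05, layers=4)
log.log(deeper, "val_acc", 0.86)
print(log.best("val_acc").name)
```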

Training AI models also requires significant computing resources and highly skilled people to optimize the process. Resource management companies like SigOpt and Determined aim to make training more efficient, powerful and automated. Initial features include hyperparameter tuning, GPU sharing, cold/warm-starting and other advanced training paradigms. These companies are addressing some of today’s most difficult AI development problems and several are gaining significant traction with customers.
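Hyperparameter tuning, one of the features listed above, can be illustrated with random search, a simple and common baseline. This is a sketch under stated assumptions. `validation_loss` is a hypothetical stand-in for “train a model and return its validation loss,” and it is chosen so that the optimum is known. Commercial optimizers, including the vendors named here, may use more sample-efficient methods such as Bayesian optimization.

```python
import math
import random


def validation_loss(learning_rate, dropout):
    """Hypothetical stand-in for a full training run.
    It is minimized near learning_rate = 1e-2 and dropout = 0.3."""
    return (math.log10(learning_rate) + 2) ** 2 + (dropout - 0.3) ** 2


def random_search(objective, n_trials=50, seed=0):
    rng = random.Random(seed)  # fixed seed so the search itself is reproducible
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {
            # Sample the learning rate log-uniformly: orders of magnitude matter most.
            "learning_rate": 10 ** rng.uniform(-5, -1),
            "dropout": rng.uniform(0.0, 0.6),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


params, loss = random_search(validation_loss, n_trials=200)
print(params, round(loss, 4))
```

Each trial is independent, so a real system can run trials in parallel across shared GPUs. That kind of scheduling is part of what resource management tools aim to automate.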

Finally, we can’t lose sight of the data. Deep learning and other AI techniques require large, carefully constructed training datasets, and building them often takes the majority of an AI developer’s time. Data generation companies like Figure Eight or Labelbox address this pain point via software tools, sometimes combined with human services, to annotate (or create) data and make it usable for AI training. Organizations also face increasing needs to standardize training data pipelines and address more nuanced issues like bias, auditability and privacy. Data management companies like Pachyderm and Iterative are working to provide this necessary functionality with tools sometimes described as “data version control” or “git for data.”
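The core idea behind “git for data” can be sketched with content addressing. The approach is to hash a canonical serialization of a dataset, so that identical data always gets the same ID and any edit produces a new one. A model can then record exactly which data snapshot it was trained on. This is a minimal, hypothetical Python sketch, and the names `snapshot_id` and `DatasetRegistry` are illustrative only. It is not the Pachyderm or DVC interface, and real systems hash files or chunks and store them durably.

```python
import hashlib
import json


def snapshot_id(records):
    """Content-address a dataset: identical data gives an identical ID, any change a new ID.
    Records are serialized with sorted keys so dict ordering doesn't affect the hash."""
    h = hashlib.sha256()
    for rec in records:
        h.update(json.dumps(rec, sort_keys=True).encode("utf-8"))
        h.update(b"\n")
    return h.hexdigest()


class DatasetRegistry:
    """Hypothetical registry mapping snapshot IDs to data, plus a lineage log."""

    def __init__(self):
        self.snapshots = {}
        self.history = []  # (snapshot_id, message) in commit order

    def commit(self, records, message):
        sid = snapshot_id(records)
        # Store copies so later edits to `records` can't silently alter the snapshot.
        self.snapshots.setdefault(sid, [dict(r) for r in records])
        self.history.append((sid, message))
        return sid

    def checkout(self, sid):
        return [dict(r) for r in self.snapshots[sid]]


reg = DatasetRegistry()
v1 = reg.commit([{"text": "great product", "label": 1}], "initial labels")
v2 = reg.commit([{"text": "great product", "label": 0}], "relabel after audit")
print(v1[:12], v2[:12])  # different IDs: the label change is tracked
```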

Training is currently the bottleneck in the AI stack for most organizations. The first large AI tooling companies may emerge here.

Operations: We don’t know what we don’t know

Traditional software teams have a robust set of tools to manage the operational aspects of the application lifecycle: test automation, code analysis, CI/CD, APM, A/B testing and many others.

Sophisticated companies like Google, Facebook and Uber have built similar capabilities for AI applications with in-house platforms. The average enterprise, however, lacks the resources and expertise to build these tools and will look to vendors to fill the gap.

Most startups in this category focus on the deployment and monitoring of models in a production setting. This is an area of clear need, analogous to the traditional programming world, that will likely attract substantial IT budgets in the future. The market, however, is still nascent. Many organizations are focused today on modeling and training. Nearly one third report having “no methodology” in place for AI development or lifecycle management.[11] Vendors may need to educate their potential customers.

Looking further ahead, AI will also require unique operations systems to reach scaled production in many industries.

Model explainability addresses the “black box” nature of AI applications, helping users understand why a loan was rejected or a news item was promoted. This functionality is fast becoming a legal and/or market requirement across industries, particularly in financial services. Several data management and model deployment companies have made inroads on explainability via sophisticated management of AI pipelines. Finding a general solution, however, is considered an open area of research. DARPA has committed $75 million to an explainable AI (“XAI”) grand challenge that will report its first results in 2019. In the meantime, more practical systems around debugging, model introspection and general visibility represent nearer-term opportunities.
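In the simplest case, a linear scoring model, an explanation can be computed exactly. The difference between an applicant’s score and a reference applicant’s score splits into one additive term per feature. The weights, features and baseline below are hypothetical. This exact decomposition does not carry over to deep networks, which is why a general solution remains open research. Methods such as SHAP or LIME approximate this kind of attribution for nonlinear models.

```python
# Hypothetical linear credit-scoring model: score = bias + sum(weight * feature).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0
# Reference applicant the explanation is measured against (an assumption, e.g. a training-set mean).
BASELINE = {"income_k": 50.0, "debt_ratio": 0.3, "late_payments": 1.0}


def score(applicant):
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def explain(applicant):
    """For a linear model, score(x) - score(baseline) splits exactly into
    weight * (x - baseline) per feature; each term is that feature's contribution."""
    contribs = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    return sorted(contribs.items(), key=lambda kv: kv[1])  # most negative first


applicant = {"income_k": 45.0, "debt_ratio": 0.6, "late_payments": 3.0}
print("approved" if score(applicant) > 0 else "rejected")
for feature, c in explain(applicant):
    print(f"{feature:>14}: {c:+.2f}")
```

The sorted output answers the “why was my loan rejected?” question directly. In this made-up example, late payments push the score down the most.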

AI security or verification is another non-trivial, unsolved problem. Researchers have found that many AI models are relatively easy to deceive with adversarial data inputs. One team fooled self-driving car algorithms into ignoring stop signs using just a few stickers.[12] Others showed this type of technique to be robust across various data types.[13] Widespread deployment and acceptance of AI applications by regulatory authorities will require applications to behave as expected and in a safe way, within appropriate guardrails. This is a potentially lucrative opportunity for startups, whether in a horizontal or vertical business model.
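Adversarial inputs can be illustrated with the fast gradient sign method (FGSM), a well-known technique from the research literature. Here it is applied to a toy logistic-regression classifier. This is a minimal sketch with made-up weights and inputs. The physical stop-sign attack cited above used a different, more elaborate method. The sketch shows the key mechanism: a small, bounded change to every input feature, chosen using the model’s own gradient, can flip the prediction.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of the positive class for a linear (logistic) classifier."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method: move each feature by eps in the direction
    that increases the cross-entropy loss for the true label y."""
    p = predict(w, b, x)
    # For logistic regression with cross-entropy loss, d(loss)/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Made-up weights and input for illustration.
w, b = [1.0, -2.0, 0.5], 0.0
x = [0.5, -0.1, 0.2]  # correctly classified as positive: p is about 0.69
x_adv = fgsm(w, b, x, y=1, eps=0.3)
print(round(predict(w, b, x), 2), round(predict(w, b, x_adv), 2))
```

No feature moves by more than `eps`, yet the predicted class changes. Defending against this kind of perturbation is part of what verification tools would need to guarantee.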

ML-as-a-Service: Does one size fit all?

AI tooling companies face a persistent question from skeptics: “Why can’t Amazon/Google/Microsoft do the same thing?”

The cloud providers have a credible play in the ML-as-a-service category, including pre-trained models and tightly integrated toolchains. These companies are well-positioned to sell tooling as a bundled service, since they already provide the infrastructure for many AI applications. They also have massive resources and demonstrated commitment to the AI market.

Cloud provider offerings have several downsides, though:

- Lock-in. Most tools published by cloud providers are designed to be used as a complete set on a particular cloud. This runs counter to the preferences of many AI developers, who favor bespoke stacks built from best-in-class tools, and of many corporate IT departments, which are pursuing hybrid or multi-cloud IT strategies.

- Wrong incentives. Cloud providers, especially Amazon and Google, are primarily incentivized to sell more cloud services. Commercial software is not their main line of business and is unlikely to become so. Microsoft may prove the exception to this rule, given its past wins with developer tools and recent acquisition of GitHub.

- One size fits all. Market feedback suggests AI tooling from cloud providers is designed for simpler, more homogeneous use cases. This is an advantage today, while most customers are early in their AI capabilities, but may become a liability as AI competency grows and deep learning moves closer to a general computing platform.

It would be a mistake to count out Amazon, Microsoft and Google. They will continue to expand and improve their offerings, addressing some of the current issues. But structural factors suggest they are unlikely to capture the full market, especially as AI becomes more popular and talent more evenly distributed.

ML-as-a-service startups like Floyd and Machinify, meanwhile, aim to beat the cloud providers at their own game, delivering ease-of-use without lock-in. This is an attractive value proposition for many developers and organizations just beginning their AI journeys. Whether these customers continue with an end-to-end approach as they become more sophisticated is a key question.

Conclusions

Seven of the world’s ten largest public companies are tech companies: Apple, Amazon, Microsoft, Alphabet, Facebook, Alibaba and Tencent.[14]

They have revolutionized industries like media, commerce and communications by building (or building on top of) today’s massive, global digital infrastructure.

Not coincidentally, these companies are also among the world’s most sophisticated AI organizations. They have built expert AI teams, absorbing leading academic labs in the process, and have demonstrated a substantial multiplier effect of AI on their core businesses. Most of these companies are also entering new markets, like transportation and home control, based on proprietary AI research.

For traditional enterprises, it is no longer enough to be “digital.” Software and online services are necessary, but not sufficient, to remain competitive. AI is the next battleground.

A rich ecosystem of startups is forming to address this need. Intelligent applications, built by vendors for a wide range of industries and corporate functions, are an important part of the equation. But enterprises must also create strong internal AI capabilities to generate long-term value.

The AI toolchain is ripe with opportunities to support business leaders – and engineers – in this journey, including:

- Bringing standards, scale and efficiency to the training process

- Creating the “home screen” for AI developers

- Explaining the predictions of advanced AI models and verifying behavior

- Building better chips for AI inference, especially at the edge

- Defining the product and go-to-market (GTM) strategy to stitch these pieces together

If you are working on these or other hard AI problems, don’t hesitate to reach out.

Matt Bornstein invests in early-stage startups, many solving hard data and AI problems, with Blumberg Capital. Follow him on LinkedIn at mattbornstein.

Thank you to John Fan, Shubho Sengupta, Mike del Balso, Tarin Ziyaee, Joey Zwicker, Alex Ratner and others who provided valuable feedback and support for this post.

Disclosure: Blumberg Capital is an investor in SigOpt and Pachyderm.

Fundraising Data (added 11/16/18, PitchBook):

Methodology:

- The AI Toolchain focuses on new tools designed specifically to support AI applications and development workflows. It avoids conventional data science and big data companies, which play a critical role in AI development but are already well-known and are beginning to consolidate. Some conventional tools (e.g., Domino) are included due to a lack of new, purpose-built alternatives.

- The landscape also focuses primarily on commercial companies to illustrate the growth of AI tooling as an industry. Some open-source projects (e.g., TensorFlow) are too important to ignore, and other areas (e.g., explainability) have few commercial entrants thus far.

- Companies are clustered based on their core technological innovations and their place within the AI development workflow. Since this is a nascent market, the categories are not officially recognized by IT analysts like Gartner or Forrester. This is more art than science, and categories will likely evolve as the market matures. We included all the activity we know about, but please let us know if you have a suggestion or proposed update.

Citations:

1. https://singularityhub.com/2018/09/07/the-4-waves-of-ai-and-why-china-has-an-edge/

2. https://www.oreilly.com/data/free/state-of-machine-learning-adoption-in-the-enterprise.csp

3. https://info.algorithmia.com/enterprise-blog-state-of-machine-learning

4. https://venturebeat.com/2017/10/24/lyfts-biggest-ai-challenge-is-getting-engineers-up-to-speed/

5. https://www-01.ibm.com/common/ssi/cgi-bin/ssialias?htmlfid=POW03210USEN

6. https://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

7. https://www.top500.org/news/intel-lays-out-new-roadmap-for-ai-portfolio/

8. https://towardsdatascience.com/deep-learning-framework-power-scores-2018-23607ddf297a

9. https://medium.com/@karpathy/software-2-0-a64152b37c35

10. https://www.fast.ai/2018/07/23/auto-ml-3/

11. https://www.oreilly.com/data/free/state-of-machine-learning-adoption-in-the-enterprise.csp

12. https://arxiv.org/abs/1707.08945

13. https://blog.openai.com/adversarial-example-research/

14. https://en.wikipedia.org/wiki/List_of_public_corporations_by_market_capitalization#2018
